Developing secure applications is critical to an organization’s reputation and operational efficiency. A compromised application that leaves the organization unable to serve its community, or that exposes student and staff information, can put the organization in the headlines with bad publicity, erode user confidence and, worse still, lead to lawsuits over data privacy breaches. Application development teams already face heavy functional requirements and enhancement requests under tight time pressure, and security vulnerabilities found late in an application are costly for an organization to fix.

Security should be built in as an integral part of the application development framework, from the gathering of user requirements through to testing and assurance review. Every change should also include a security risk assessment to ensure that enhanced software modules do not introduce security weaknesses.

This newsletter outlines the need for, and a strategy for, strengthening current application development practice by incorporating the processes necessary to establish a secure development life cycle. The aim is to minimize security vulnerabilities and application defects and to shorten the time needed to remediate them.

Challenges of Secure Application Development

There are several key challenges in developing secure and reliable applications. Developers are typically not trained in secure coding practices and, as a result, are not aware of the myriad ways in which security vulnerabilities can be introduced into their code. There are also misalignments between project stakeholders and the development team across the software development life cycle:

- Misaligned priorities - Development teams are asked to focus on coding to meet functional requirements in a timely manner. Non-functional requirements such as security are typically given lower priority, or are considered only as an afterthought once security incidents have occurred.

- Misaligned processes - Security testing happens only at the end of application development, when vulnerabilities and coding errors are most costly to fix and developers are focused on meeting the application release date.

- Misaligned capabilities - Developers lack the knowledge to code their programs securely and do not know how to use code review methods and tools to check their programs for security weaknesses.

Because proper security testing and reviews are ignored or overlooked during the development life cycle, applications can end up with vulnerabilities down the road. Exploits and breaches of application vulnerabilities have been reported across industry verticals and geopolitical boundaries:

- In May 2011 [2], Sony Music web sites suffered SQL injection attacks by the group LulzSec. Sony believed that SQL vulnerabilities were responsible for the attacks against the Sony PlayStation Network and Qriocity that leaked the private data of 77 million users and led Sony to shut down the services for over a month. The overall breach cost Sony more than US$171 million.

- In February 2011 [3], the cyber security consulting firm HBGary was attacked by the group Anonymous. A SQL injection vulnerability in the www.hbgaryfederal.com website, combined with poor cryptographic implementation, enabled Anonymous to extract company officers’ usernames and passwords, which in turn led to a leak of sensitive information and confidential internal emails. The CEO of HBGary Federal resigned from the company shortly thereafter.

- A cross-site scripting (XSS) vulnerability in Android Market, discovered in March 2011, allowed attackers to remotely install apps onto users’ Android devices without their knowledge or consent.

“The information technology industry has a big problem, a 60 billion dollar problem. In fact, 60 billion dollars is what the global IT industry spends on security in one year. That is more than the gross domestic product of two-thirds of the countries in the world. Every week there is a new report of a data breach, of email addresses sold to spammers, or of stolen credit card information. If the IT industry is spending so much on security, why is it still getting hacked? The answer is that it is spending money, but on the wrong things.” - Forbes

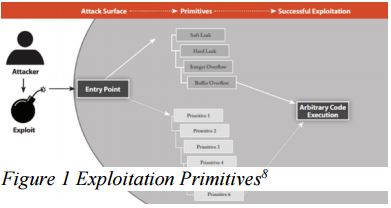

Primitives of Application Security Flaws

The first primitive is the soft leak [4], which allows a remote code execution (RCE) exploit to manipulate memory in the targeted application without leaving a trace or causing security repercussions. Soft leaks arise from valid program functionality, so they can occur in most common applications and extensions. For example, a web application server, by design, accepts HTTP requests from a client. The client sends information that is held until the session terminates. By understanding the mechanics of how requests and sessions work, an attacker may write RCE exploits that target the memory layout of a particular application.

The second primitive is the hard leak [5]. The hard leak, or resource leak, is familiar to most C/C++ programmers. It occurs when developers forget to free memory that was acquired dynamically at runtime. While most developers think of this as a quality problem that results in excessive memory consumption at worst, many attackers see it as an opportunity to improve the stability of an exploit and to exhaust system resources. By acquiring memory permanently, an attacker can ensure that certain portions of memory are never subsequently reused throughout the lifetime of a process.
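The hard leak can be sketched in a few lines of C. This is a minimal illustration, not code from any real server: `handle_request` and its acceptance rule (the payload must start with `G`) are hypothetical. Every exit path goes through a single cleanup label, so the buffer acquired at runtime is always released.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical request handler, for illustration only.
 * Returns 0 if the request is accepted, -1 if it is rejected
 * or memory could not be allocated. */
int handle_request(const char *payload)
{
    int status = -1;
    char *copy = NULL;

    assert(payload != NULL);

    size_t len = strlen(payload);
    copy = malloc(len + 1);
    if (copy == NULL)
        goto cleanup;
    memcpy(copy, payload, len + 1);

    /* Early rejection. A leaky version would "return -1;" here and
     * never free copy, losing one buffer per rejected request. */
    if (len == 0 || copy[0] != 'G')
        goto cleanup;

    status = 0; /* request accepted */

cleanup:
    free(copy); /* free(NULL) is a no-op, so this is always safe */
    return status;
}
```

With the early `return` variant, a client that can send rejected requests at will controls how much memory the process never gets back, which is exactly the property the hard leak primitive relies on.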

“Get some form of security training for everyone involved in an application development project at least once a year. This includes not only coders and testers but also engineering and program managers.” - OWASP

The third primitive is the integer overflow [6]. This occurs when a mathematical operation attempts to store a number larger than an integer type can hold, so that the excess is lost. The loss of the excess data is sometimes referred to as an integer “wrap”. For example, an unsigned 32-bit integer can hold a maximum value of 4,294,967,295 (UINT_MAX). Adding 1 to that maximum makes the integer start counting again at zero (UINT_MAX + 1 == 0). A real-world example is a car odometer rolling over after 1 million miles and restarting its mileage count from zero. By triggering an integer overflow in a memory allocation routine, an attacker can cause less memory to be allocated than was intended.
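The allocation case can be made concrete with a short C sketch. The helper names `checked_mul` and `alloc_array` are hypothetical, chosen for illustration. The idea is to detect the wrap with a division before multiplying, and to refuse the request rather than allocate a buffer that is smaller than the caller expects.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Multiply a by b and store the result in *out.
 * Returns 0 on success, -1 if the product would wrap past SIZE_MAX. */
int checked_mul(size_t a, size_t b, size_t *out)
{
    assert(out != NULL);
    if (b != 0 && a > SIZE_MAX / b)
        return -1;
    *out = a * b;
    return 0;
}

/* Allocate room for count elements of elem_size bytes each.
 * Returns NULL if the total size would wrap or malloc fails. */
void *alloc_array(size_t count, size_t elem_size)
{
    size_t total;
    if (checked_mul(count, elem_size, &total) != 0)
        return NULL; /* refuse instead of under-allocating */
    return malloc(total);
}
```

Without the check, a request such as `count = SIZE_MAX / 2 + 2` with `elem_size = 2` would wrap to a total of 2 bytes, and later writes to the "array" would run far past the end of the allocation. The standard `calloc` function performs a comparable check internally on conforming implementations.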

Finally, the last primitive is the buffer overflow [7]. This is a common kind of vulnerability in programs written in languages that do not check array bounds automatically (e.g. C/C++ programs). A buffer overflow occurs when a program writes past the end of a buffer, corrupting adjacent memory. In some cases the overwritten stack or heap content is later used or executed by the program, which allows an attacker to disrupt the normal operation of the system and, ultimately, take over the program’s flow of control.
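A minimal C sketch of the defensive pattern follows. The function `copy_name` and the buffer size `USERNAME_BUF_SIZE` are hypothetical names used only for illustration. The length of the input is compared against the capacity of the destination before any byte is written, and input that does not fit is rejected.

```c
#include <assert.h>
#include <string.h>

#define USERNAME_BUF_SIZE 16 /* hypothetical capacity, including the '\0' */

/* Copy src into dst, which has room for dst_size bytes.
 * Returns 0 on success, -1 if src (plus its terminator) does not fit.
 * An unchecked strcpy(dst, src) would instead write past the end of dst. */
int copy_name(char *dst, size_t dst_size, const char *src)
{
    assert(dst != NULL && src != NULL);

    size_t len = strlen(src);
    if (len >= dst_size)
        return -1; /* reject rather than truncate or overflow */

    memcpy(dst, src, len + 1); /* len + 1 copies the terminating '\0' */
    return 0;
}
```

A caller would declare `char name[USERNAME_BUF_SIZE];` and call `copy_name(name, sizeof name, input)`. Rejecting oversized input is one design choice; truncating it is another, but silent truncation can itself cause logic errors, so the sketch fails loudly.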

As illustrated below, by limiting the number of primitives within their code, developers can make the process of exploiting an application much more difficult, thereby increasing the cost of exploitation.

“Threat modelling is a great way to find potentially deep design-level vulnerabilities earlier in the application development process, when they are much less expensive to fix. When applying a Security Development Lifecycle with threat modelling capabilities, focus attention on those kinds of design vulnerabilities, and don’t get dragged down into the weeds trying to identify every possible cross-site scripting vulnerability.” - Microsoft

Common Application Security Vulnerabilities

Besides understanding the primitives used to exploit applications, developers should also take note of the following common application security vulnerabilities:

Arbitrary code execution: Through arbitrary code execution, an attacker may acquire control of a target system by way of a buffer overflow vulnerability, thereby gaining the power to execute commands and application functions at will. These exploits take advantage of application bugs that allow RCE against the operating system and inject shellcode so that the attacker can run arbitrary commands on another’s computer. Once this is accomplished, the attacker proceeds to escalate privileges, which enables the compromised system to be used for various malicious tasks, including spreading email spam and launching denial-of-service attacks.

Data loss: This class of security vulnerability results in the loss of sensitive data through corruption, modification, or theft. Data loss features prominently in the 2011 CWE/SANS Top 25 Most Dangerous Software Errors list [9].

Security bypass: An attacker could leverage application vulnerabilities to bypass authentication and authorization modules. For example, an application that does not safely check filenames before using them can, in extreme cases, allow unauthorized access to resources.

Denial of service: This class of vulnerability may cause an application to slow down or stop responding. An application can crash with unintended errors, preventing legitimate use.

Loss of integrity: Integrity is defined as the expectation of reliability in the behaviour and performance of application processes. The integrity of an application is compromised when expressions are evaluated in ways that do not meet common standards, leading to side effects or to the misuse or mixing of data types. These vulnerabilities may result from inadvertent errors in program code and are not easily fixed.
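The filename case mentioned under security bypass lends itself to a small sketch. The following C function is an illustration under stated assumptions, not a complete defence: `is_safe_filename`, the allowed character set, and the 64-character limit are all hypothetical choices. It accepts only plain file names built from an allow-list of characters, which rules out path separators and leading dots.

```c
#include <assert.h>
#include <ctype.h>
#include <string.h>

/* Returns 1 if name is a plain file name made only of letters, digits,
 * '.', '_' and '-', does not start with '.', and is 1 to 64 characters
 * long. Returns 0 otherwise. */
int is_safe_filename(const char *name)
{
    assert(name != NULL);

    size_t len = strlen(name);
    if (len == 0 || len > 64 || name[0] == '.')
        return 0;

    for (size_t i = 0; i < len; i++) {
        unsigned char c = (unsigned char)name[i];
        /* '/' and '\\' are not on the allow-list, so the name
         * cannot step into another directory. */
        if (!isalnum(c) && c != '.' && c != '_' && c != '-')
            return 0;
    }
    return 1;
}
```

An allow-list is used instead of searching for known-bad sequences such as `../`, because attackers tend to find encodings that a deny-list misses. In a real application this check would complement, not replace, file system permissions.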

“Be sure to have a security incident response plan in place before pushing an application into production. A development team does not want to be caught off-guard without a plan when hackers launch a new zero-day attack against the organization in the middle of the night on a long holiday weekend.” – BH Consulting

Strategic Approach to Application Security

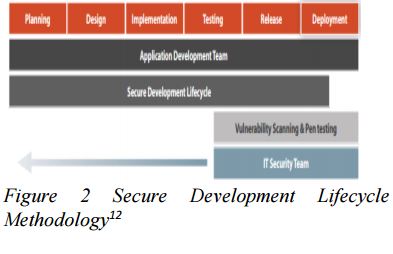

To counter these security flaws, the secure development lifecycle (SDL) [10] provides a specific model of activities for development teams to perform over the course of their software development lifecycle. The SDL is based on a waterfall-style development methodology with distinct lifecycle phases:

Training, policy and organizational capabilities - Provide a series of intensive training sessions for application development teams in the basics of secure coding, and ensure that they stay informed of the latest trends in security issues and vulnerabilities.

Planning and design - Apply the STRIDE model [11] to consider threats and vulnerabilities in the initial design of new applications and features, which permits security to be integrated in a way that minimizes disruption to plans and schedules.

Implementation - Avoid coding issues that could lead to vulnerabilities, and leverage SDL tools to assist in building more secure and reliable applications. Secure coding guidelines or a baseline should be established to guide developers on how to code securely (e.g. perform input and output sanity checks, enforce strong authentication and session management, avoid insecure object references, enhance error handling routines, etc.).

Verification and testing - Perform the series of security tests defined during the planning and design stage. Examples include code review, penetration tests, load and stress tests, and security functional tests to ensure that the application works and is secure as designed.

Release and response - Prepare a security incident response plan and a mitigation process to address new threats that emerge over time.
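As one example of the output sanity checks mentioned under the implementation phase, the C sketch below escapes the characters that are significant in HTML before untrusted text is written into a page, which is a common defence against cross-site scripting. The function name `html_escape` and its interface are hypothetical; a production application would normally rely on the escaping facilities of its web framework.

```c
#include <assert.h>
#include <string.h>

/* Write an HTML-escaped copy of in to out, which has room for
 * out_size bytes. Returns 0 on success, -1 if out is too small. */
int html_escape(const char *in, char *out, size_t out_size)
{
    assert(in != NULL && out != NULL);

    if (out_size == 0)
        return -1;

    size_t used = 0;
    for (; *in != '\0'; in++) {
        char single[2] = { *in, '\0' };
        const char *rep;

        switch (*in) {
        case '&':  rep = "&amp;";  break;
        case '<':  rep = "&lt;";   break;
        case '>':  rep = "&gt;";   break;
        case '"':  rep = "&quot;"; break;
        case '\'': rep = "&#39;";  break;
        default:   rep = single;   break;
        }

        size_t n = strlen(rep);
        if (n >= out_size - used) /* keep one byte for the final '\0' */
            return -1;
        memcpy(out + used, rep, n);
        used += n;
    }
    out[used] = '\0';
    return 0;
}
```

The function also checks the remaining capacity before every write, so the escaping step does not itself introduce a buffer overflow.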

“While a source analyser is helpful, no tool is perfect, and static analysers tend to be “trigger-happy” when it comes to reporting potential vulnerabilities. If a project team has the extra budget, it might also consider using third-party services to triage scan results and weed out any false positives.” – Microsoft

Best Practices

The following summarizes some best practices for secure application development that universities can consider building into their application development programs:

Bring business risk management into application development – Because security vulnerabilities can affect an organization’s reputation and business objectives, development team executives must rethink development practices and mandate a mature process, such as SDL, that is capable of delivering quality and security assurance.

Develop a secure coding guideline – To ensure coding consistency and the application of best security practices, a secure coding guideline should be developed and developers trained to follow it. Quality assurance checkpoints should also be established at various development stages to check whether the guideline has been followed.

Push security tests earlier in the development stage – Security testing should never be left until after the application goes into production. At a minimum, security tests should be performed during user and system acceptance tests. Even better, they should be shifted upstream in the development stage to take place during unit tests.

Testing and assurance – Different types of tests (e.g. code review, penetration test, load and stress test, security functional tests) should be defined, with sufficient time allocated for them to be executed at different stages of the application development life cycle. Besides testing before production, some tests, such as penetration tests, should be carried out whenever there is a major release, and regularly after production, because the threat landscape and new exploits continue to evolve.

Apply the same to third-party supplied software – Third-party code is often used in development projects. The same security testing methods (e.g. code review, penetration test) should be applied to third-party code and software in the same way as to in-house developed applications.

Conclusion

Just because an application meets its functional requirements does not necessarily mean that it is secure. Universities should consider enhancing their application development programs to include a secure development lifecycle, which can offer more assurance that the resulting applications will be secure from cyber attacks.

Vulnerabilities are an inevitable fact of application development. As a practical approach, application security testing gives developers and security teams greater visibility into security risks early in the development process. It provides a common, trusted basis of reliable and measurable security testing methods, such as code review and penetration testing, against constantly morphing security threats.

References

1. 2012. IBM, “Five steps to achieve success in your application security program.”

2. 2011. BBC News, “PlayStation outage caused by hacking attack.”

3. 2011. Krebs on Security, “HBGary Federal Hacked by Anonymous.”

4. 2007. Nicolas Waisman, “Understanding and bypassing Windows Heap Protection.”

5. Same source as reference 4.

6. Same source as reference 4.

7. Same source as reference 4.

8. Same source as reference 4.

9. 2011. CWE/SANS, “Top 25 Most Dangerous Software Errors.”

10. 2010. “Simplified Implementation of the Microsoft SDL.”

11. 2002. “The STRIDE Threat Model.” https://msdn.microsoft.com/en-us/library/ee823878%28v=cs.20%29.aspx

12. 2010. Diagram adapted from “Security and the Software Development Lifecycle: Secure at the Source”, Aberdeen Group.