The College is keen on fostering strategic partnerships with the social services sector to create a more cohesive and caring society. Over the years, our faculty members and students have developed research projects with NGOs in Hong Kong by applying available technology to enhance the delivery of social services. Below are some of the highlighted projects:

|

Project Title:

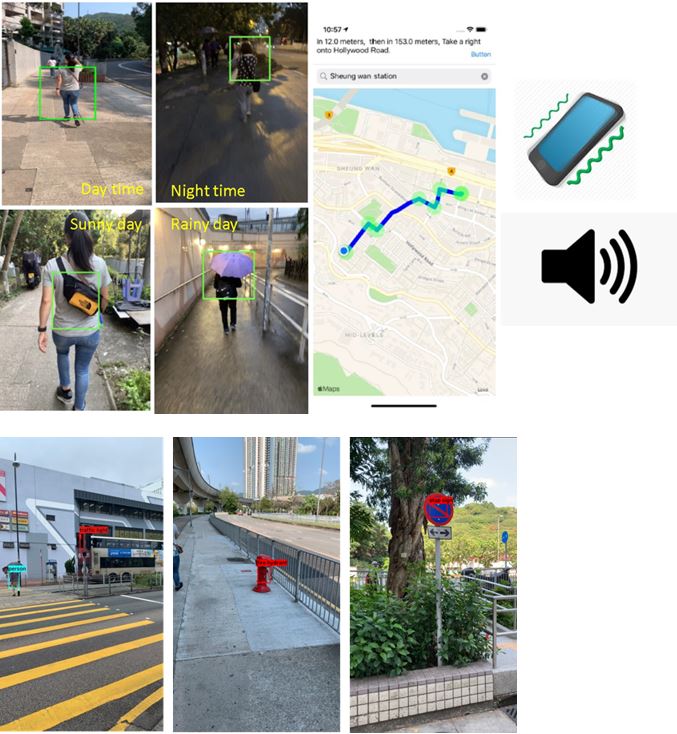

Navigator Based on Pedestrian Tracking and GPS for Visually Impaired People (iOS Application)

|

|

Supervisor: Dr Leanne Lai Hang Chan

Students: MingKei Mo

Department of Electrical Engineering

Partnered NGO:

Hong Kong Blind Union

|

|

Project Description:

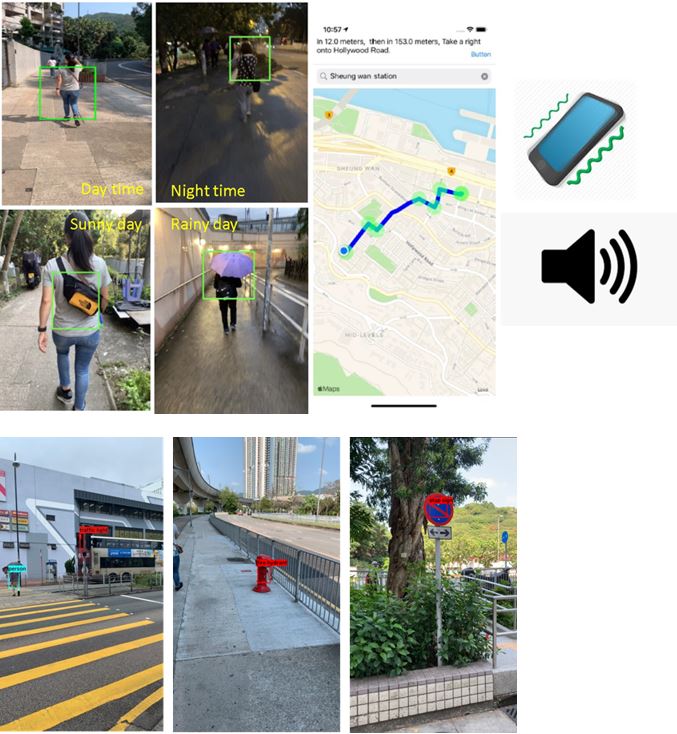

Purpose: “Navigator Based on Pedestrian Tracking and GPS for Visually Impaired People (iOS Application)” (“the Navigator”) aims to offer reliable guiding assistance for visually impaired people. Currently, visually impaired people typically rely on guiding tools such as tactile sticks or guide dogs when navigating outdoors. However, the number of guide dogs is limited, and a tactile stick cannot provide accurate and informative feedback to the user. As technology advances, smart devices can be combined with AI to act as a new generation of guiding devices.

Method: The Navigator uses the user’s GPS location to plan a route to the destination. It then uses the camera on the mobile device and an object-tracking AI model to guide the user to follow a pedestrian who is heading to the same destination. Whenever the pedestrian being followed turns out not to share the user’s destination, the Navigator chooses another pedestrian. The main feedback medium for guiding the user’s direction is haptic; sound is used only for complicated or safety-critical messages.
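The decision to switch pedestrians can be illustrated with a minimal sketch. It assumes, purely for illustration, that the app compares the bearing of the planned route with the heading of the tracked pedestrian; the function names and the 30-degree threshold are hypothetical and are not taken from the project.

```python
import math

def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, in degrees [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return math.degrees(math.atan2(y, x)) % 360.0

def angle_diff_deg(a, b):
    """Smallest absolute difference between two headings, in degrees [0, 180]."""
    return abs((a - b + 180.0) % 360.0 - 180.0)

def should_switch_pedestrian(user_pos, next_waypoint, pedestrian_heading_deg,
                             max_deviation_deg=30.0):
    """True when the pedestrian's heading deviates too far from the planned route.

    The threshold is a hypothetical tuning parameter, not a value from the project.
    """
    route_bearing = bearing_deg(*user_pos, *next_waypoint)
    return angle_diff_deg(route_bearing, pedestrian_heading_deg) > max_deviation_deg

# Example: the route heads due north; a pedestrian walking east should be dropped.
user = (22.3000, 114.1700)
waypoint = (22.3010, 114.1700)
print(should_switch_pedestrian(user, waypoint, pedestrian_heading_deg=10.0))
print(should_switch_pedestrian(user, waypoint, pedestrian_heading_deg=90.0))
```

In practice the pedestrian’s heading would have to be estimated from the camera tracker and the device compass, which this sketch does not attempt.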

Result: The Navigator is able to plan a route from the user’s location to the destination, follow a pedestrian ahead of the user and provide appropriate feedback to the user.

Significance: By integrating advanced software technologies with a single piece of hardware, the smart mobile device, the Navigator can potentially provide a low-cost temporary substitute for visually impaired people while they are waiting for their own guide dog. It may therefore help them use social resources and services more efficiently during the waiting period, and so improve the inclusion of visually impaired people in society.

GitHub link:

https://github.com/wireMuffin/Navigator-for-Visually-Impaired

More about the project:

https://youtu.be/wiPX2TXKQ1c

|

|

Project Title:

Localization in the MTR for the Visually Impaired by Deep Learning

|

|

Supervisor: Dr Kelvin Shiu Yin Yuen

Students: Tsz Kin Chau

Department of Electrical Engineering

Partnered NGO:

Hong Kong Blind Union

|

|

Project Description:

MTR stations provide some aids to help visually impaired people (VIP) navigate. However, because of the complicated structure of the stations, these aids may not meet all of their needs.

To address this problem, we propose to train a deep neural network model on Wi-Fi signals and to develop an Android app that helps VIP locate their own position and the facilities on the MTR platform. The app would provide several functions with voice feedback, such as reporting the distance between the user’s position and the nearest elevator.
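The idea of locating a user from Wi-Fi signals and reporting the nearest elevator can be sketched as follows. Note that this sketch swaps the project’s deep neural network for a much simpler nearest-neighbour fingerprint match, only to show the data flow; all coordinates, signal strengths and facility names are made up.

```python
import math

# Hypothetical survey data: platform position (x, y in metres) mapped to the
# RSSI (dBm) measured from three Wi-Fi access points at that position.
FINGERPRINTS = {
    (0.0, 0.0): [-40, -70, -80],
    (10.0, 0.0): [-60, -50, -75],
    (20.0, 0.0): [-80, -65, -45],
}

# Hypothetical facility coordinates on the same platform grid.
ELEVATORS = {"Lift A": (2.0, 0.0), "Lift B": (16.0, 0.0)}

def locate(rssi):
    """Return the surveyed position whose fingerprint is closest to `rssi`.

    Nearest-neighbour matching stands in here for the trained neural network.
    """
    return min(FINGERPRINTS, key=lambda pos: math.dist(FINGERPRINTS[pos], rssi))

def nearest_elevator(position):
    """Return (name, distance in metres) of the elevator closest to `position`."""
    name = min(ELEVATORS, key=lambda n: math.dist(ELEVATORS[n], position))
    return name, math.dist(ELEVATORS[name], position)

position = locate([-58, -52, -77])
name, distance = nearest_elevator(position)
print(f"You are near {position}; {name} is {distance:.0f} metres away.")
```

The printed sentence is the kind of message that could be passed to a text-to-speech engine for voice feedback.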

Software / Hardware Available:

Android Application

Technology Available:

Deep neural networks

|

|

Project Title:

An App to help the Visually Impaired People to Read Music Sheets

|

|

Supervisor: Dr Kelvin Shiu Yin Yuen

Student: Ping Keung Shan

Department of Electrical Engineering

Partnered NGO:

Hong Kong Blind Union

Technologies for the Elderly and Disabled people by Youths

|

|

Project Description:

This project aims to design an iOS app to help visually impaired people (VIPs) read music sheets. At present, VIPs need to have music sheets converted to braille before they can read them, which is inconvenient and expensive. With this application, VIPs can read music sheets via VoiceOver, a built-in accessibility feature of iOS, by touching the screen, as if they were reading paper music sheets in braille.

Software / Hardware Available:

Prototype of an App

|

|

Project Title:

Dou Shou Qi for the Visually Impaired

|

|

Supervisor: Dr Kelvin Shiu Yin Yuen

Student: Hei Ching Poon

Department of Electrical Engineering

Partnered NGO:

Hong Kong Federation of the Blind

Jockey Club

|

|

Project Description:

This project aims to develop a new version of an iOS app that enables visually impaired people (VIPs) to play Dou Shou Qi (Jungle). When a VIP touches a piece on the screen, the VoiceOver function on the iOS device announces the name and position of the piece. This enables VIPs to play with others via the app.

Software / Hardware Available:

iOS application

Technology available:

VoiceOver on iOS

|

|

Project Title:

Chinese Chess Game App for the Visually Impaired

|

|

Supervisor: Dr Kelvin Shiu Yin Yuen

Student: Chi Pan Yiu

Department of Electrical Engineering

Partnered NGO:

Hong Kong Blind Union

Hong Kong Federation of the Blind

|

|

Project Description:

This is an iOS Chinese Chess game app developed for visually impaired people (VIPs). It is a two-player game that lets VIPs play over the internet using Apple’s GameKit framework, and it adds voice recognition through Apple’s Speech framework to improve the gaming experience. VIPs can play by voice command, for example locating a piece by voice instead of tediously searching the screen.

Software / Hardware Available:

iOS application

Technology available:

VoiceOver on iOS

More about the project: iOS app available at

https://apps.apple.com/hk/app/%E4%B8%AD%E5%9C%8B%E8%B1%A1%E6%A3%8B-%E7%9B%B2%E4%BA%BA%E8%BC%94%E5%8A%A9/id1506959366#?

|

|

Project Title:

An Electronic Guide Dog App for the Visually Impaired

|

|

Supervisor: Dr Kelvin Shiu Yin Yuen

Student: Min Yung

Department of Electrical Engineering

Partnered NGO:

Hong Kong Blind Union

Hong Kong Federation of the Blind

|

|

Project Description:

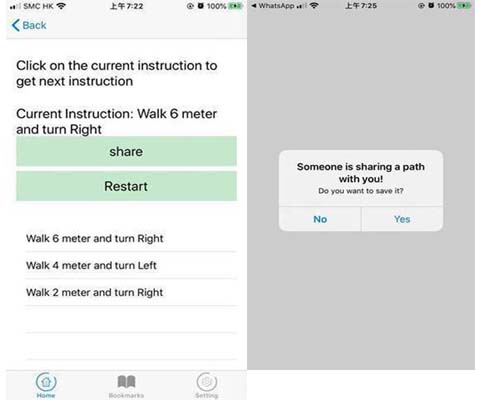

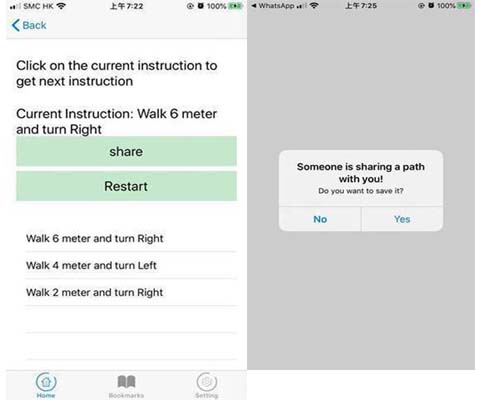

This project aims to develop an iOS app, “Electronic Guide Dog” (EGD), which helps visually impaired people (VIPs) record routes and navigate each straight segment of a route, and allows them to share the recorded routes with others.

EGD relies neither on GPS, which is inaccurate inside buildings, nor on beacon technology, which requires substantial installation and maintenance costs. With EGD, VIPs can navigate by themselves easily even when heading to an unfamiliar destination.

More about the project: iOS app available at: https://apps.apple.com/hk/app/egd/id1511175896?l=en

|

|

Project Title:

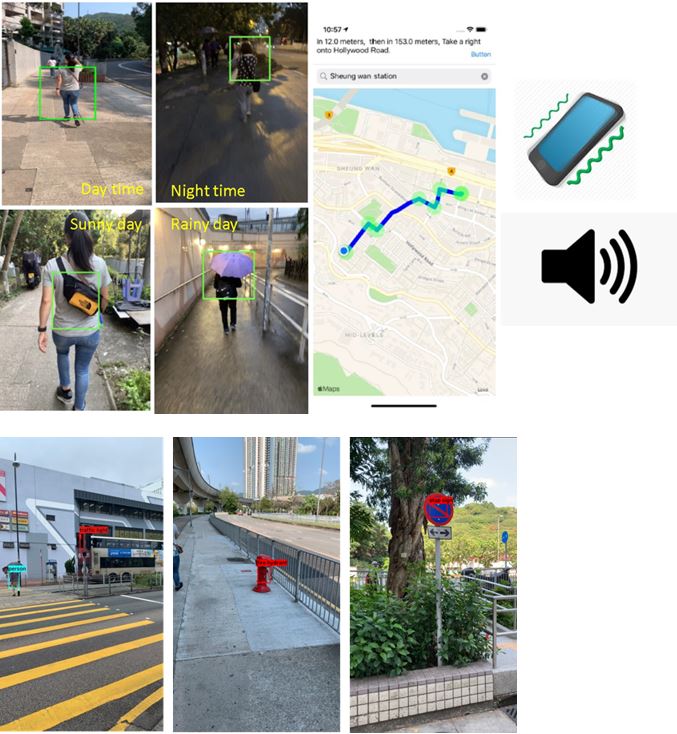

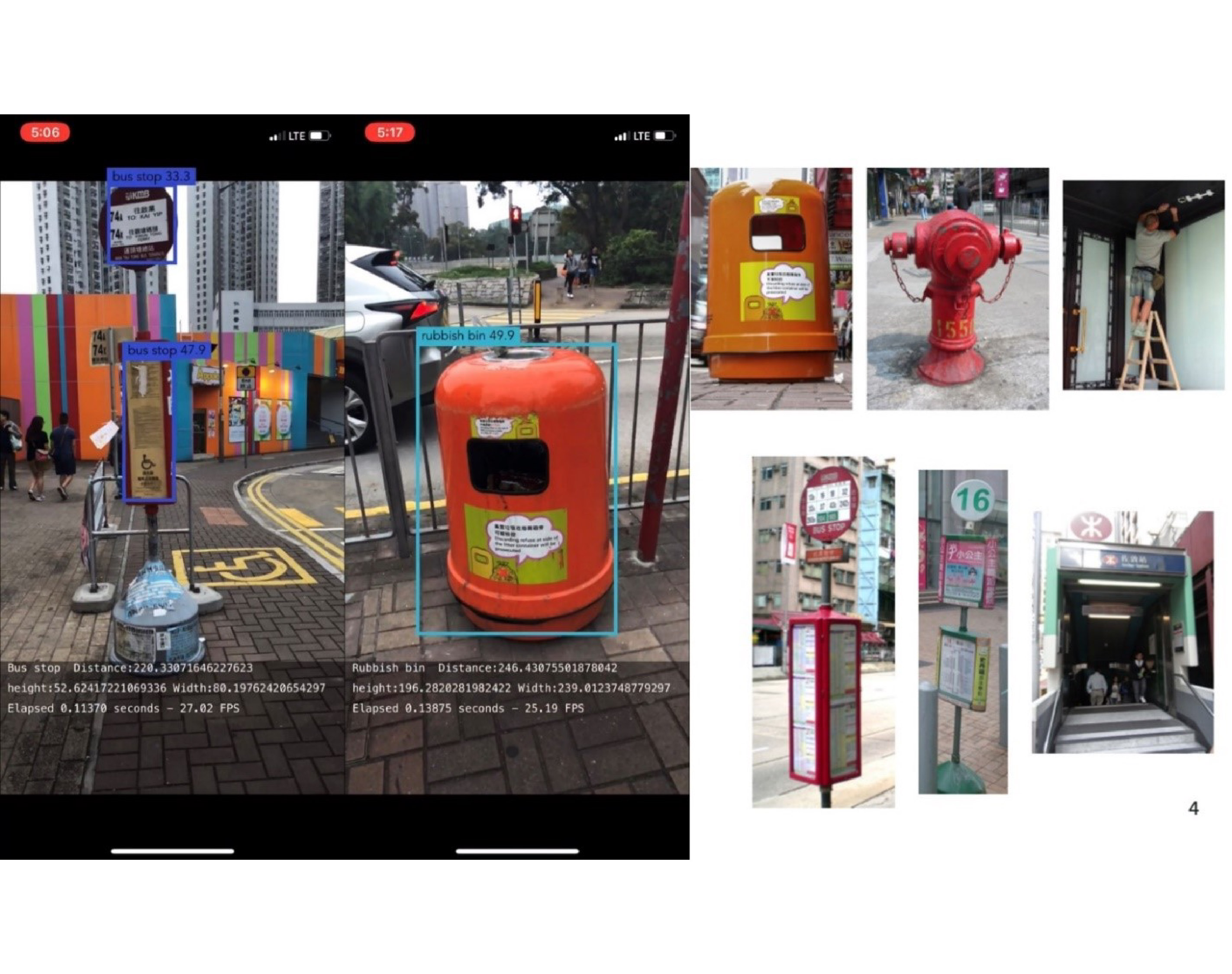

Real-time Outdoor Objects Recognition and Distance Detection for Visually Impaired People

|

|

Supervisor: Dr Leanne Lai Hang Chan

Student: Shing Fung Wong

Department of Electrical Engineering

Partnered NGO:

Hong Kong Blind Union

|

|

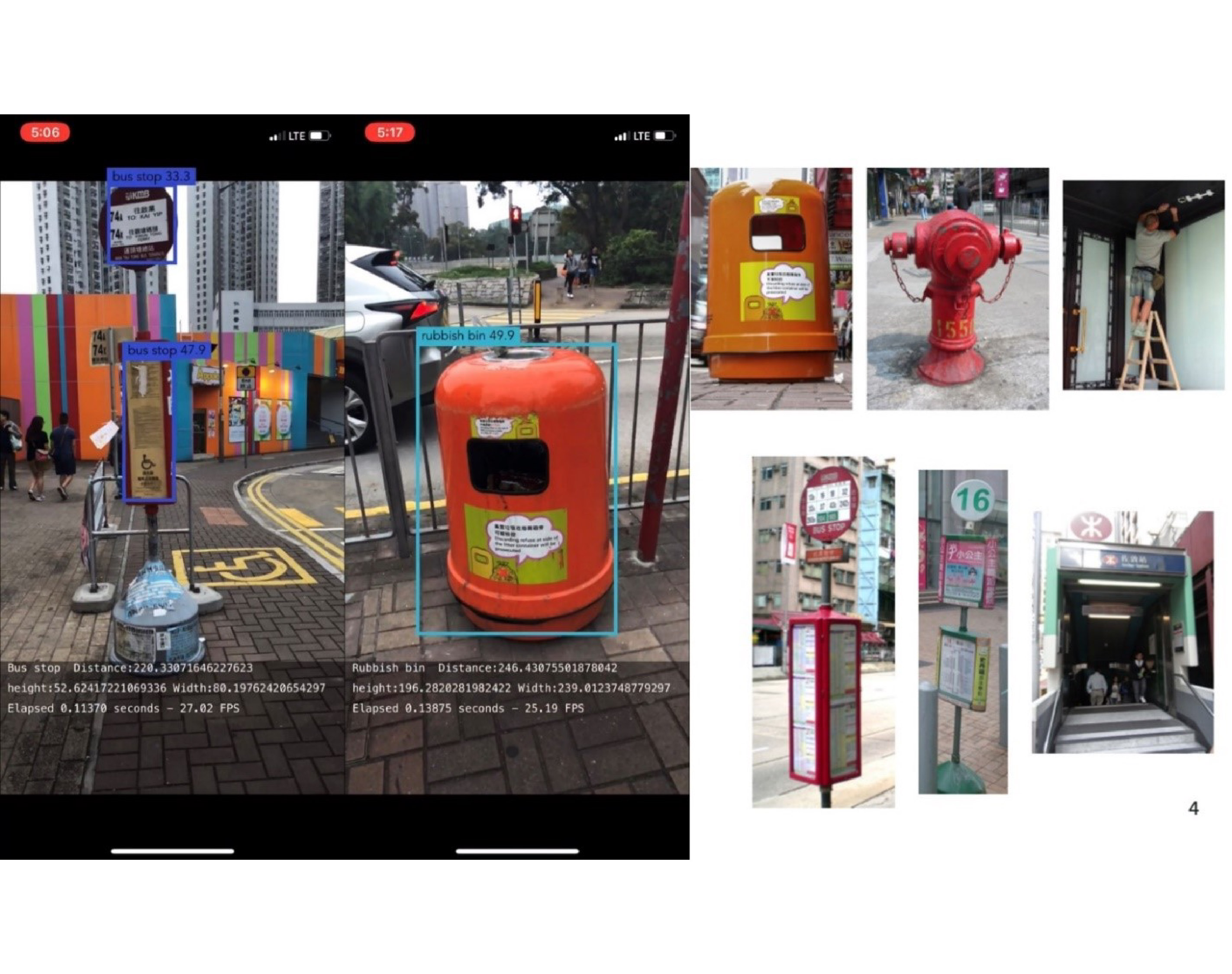

Project Description:

According to the World Health Organization, there are 253 million people with visual disabilities. Among them, 217 million have moderate to severe vision impairment and 36 million are totally blind. According to another study, low mobility is one of the major daily-life problems encountered by the visually impaired. Walking on unfamiliar roads can be challenging and possibly dangerous for them.

There are existing applications designed to help the visually impaired. For example, Microsoft employs image recognition technology in its Seeing AI application to identify different scenes, colors and emotions. Another application, TapTapSee, describes objects in a photo or short video taken with the user’s smartphone camera. It uses the “CloudSight Image Recognition API” in the pre-processing stage, so it can return a correct description even if the picture was taken at a narrow angle or in poor lighting. However, the majority of existing smartphone applications are not designed for identifying outdoor objects, their processing is slow because of the high latency of cloud computing, and they lack distance detection. As a result, they fail to provide timely notifications about the objects surrounding the user.

The objective of this project is to develop an offline smartphone application that performs real-time object recognition and distance detection on common outdoor objects. The application aims to be low-cost and real-time, minimizing stress and risk for visually impaired people when they walk around unfamiliar locations.

Technology Available:

iOS Application "SeePath"

Other Information:

Awarded InfoTech Job Market Driven Scholarship 2019

More about the project: https://youtu.be/DbxMU4qoBZE

|

|

Project Title:

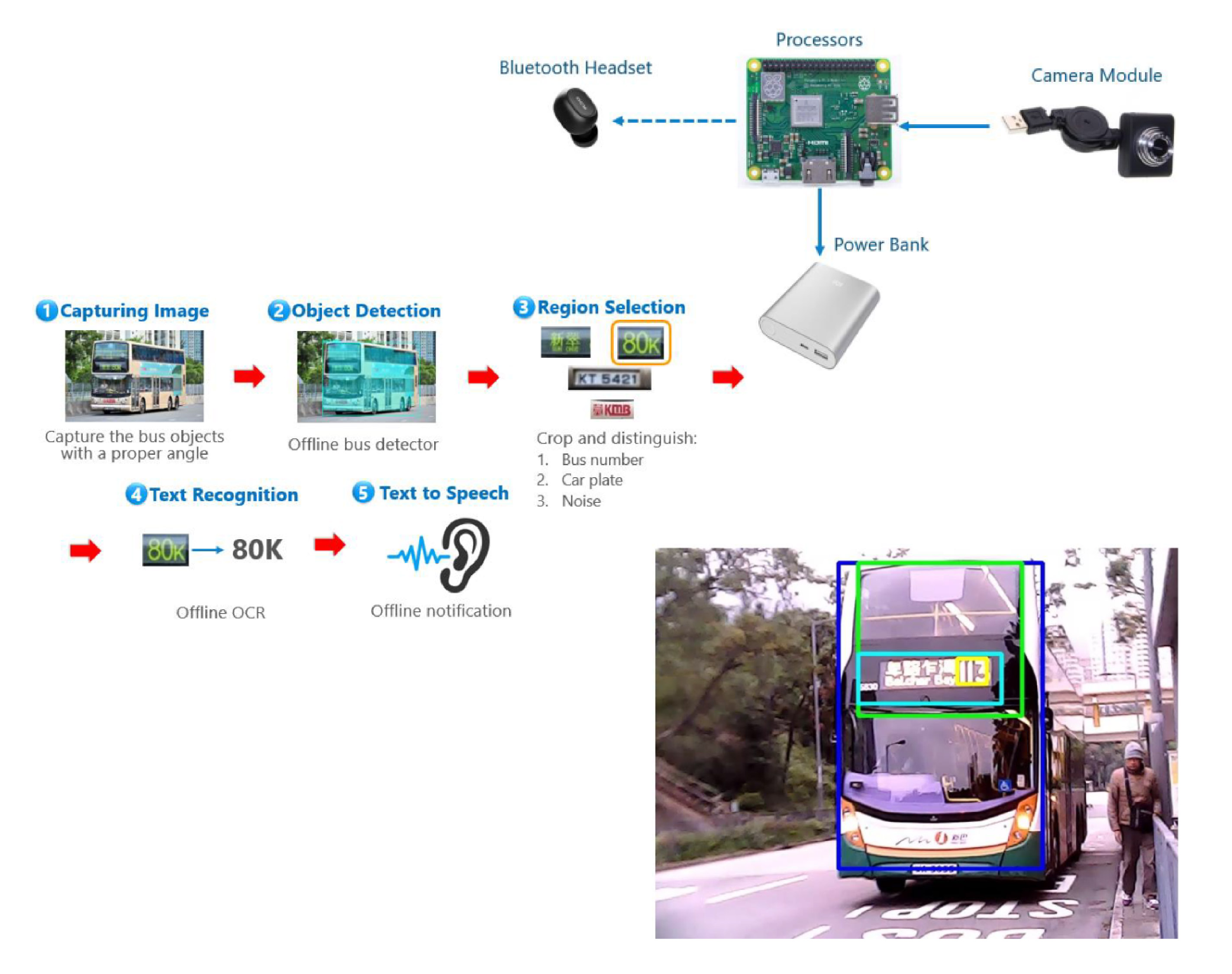

Digital Empowerment for the Visually Impaired

|

|

Supervisor: Dr Ray Chak Chung Cheung

Student: Wing Yin Yu

Department of Electrical Engineering

Partnered NGO:

Hong Kong Federation of the Blind

|

|

Project Description:

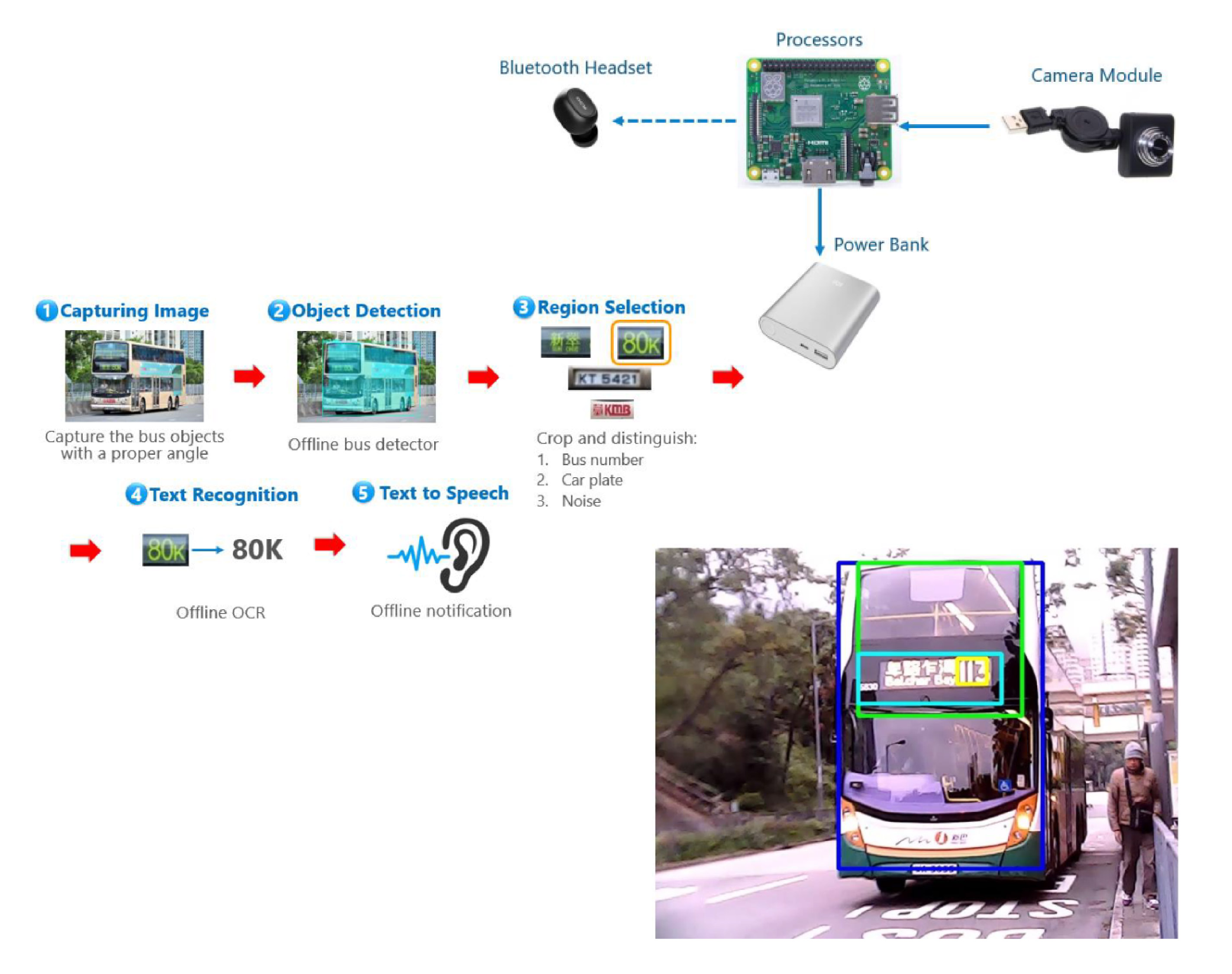

The main objective of this project is to develop a portable device that helps visually impaired people (VIPs) recognize bus route numbers in real time. The device is designed to perform the following three functions:

- To recognize the route label of incoming double-decker buses in Hong Kong

- To notify the VIP through a Bluetooth headset once a bus is detected

- To operate in a hands-free manner (i.e. the device does not need to be held)

Prize Obtained:

- ASM Technology Award 2019 - Outstanding Award

- CityU EE Project Competition 2019 - Bronze Prize

Software / Hardware Available:

Hardware: a Raspberry Pi 3+, a Bluetooth headset, a power bank, a web-camera

Technology Available:

Computer Vision, Object Detection, Segmentation

|

|

Project Title:

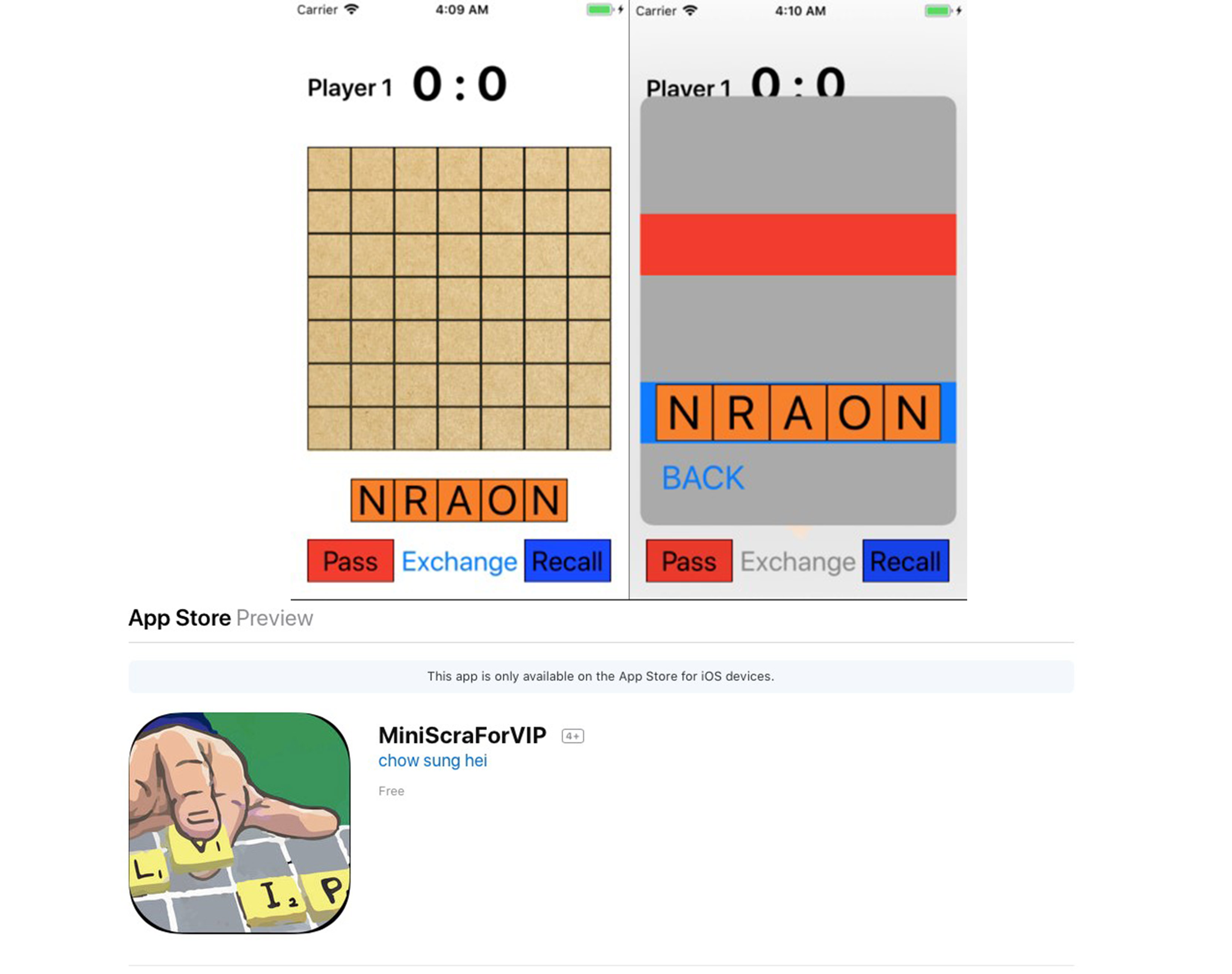

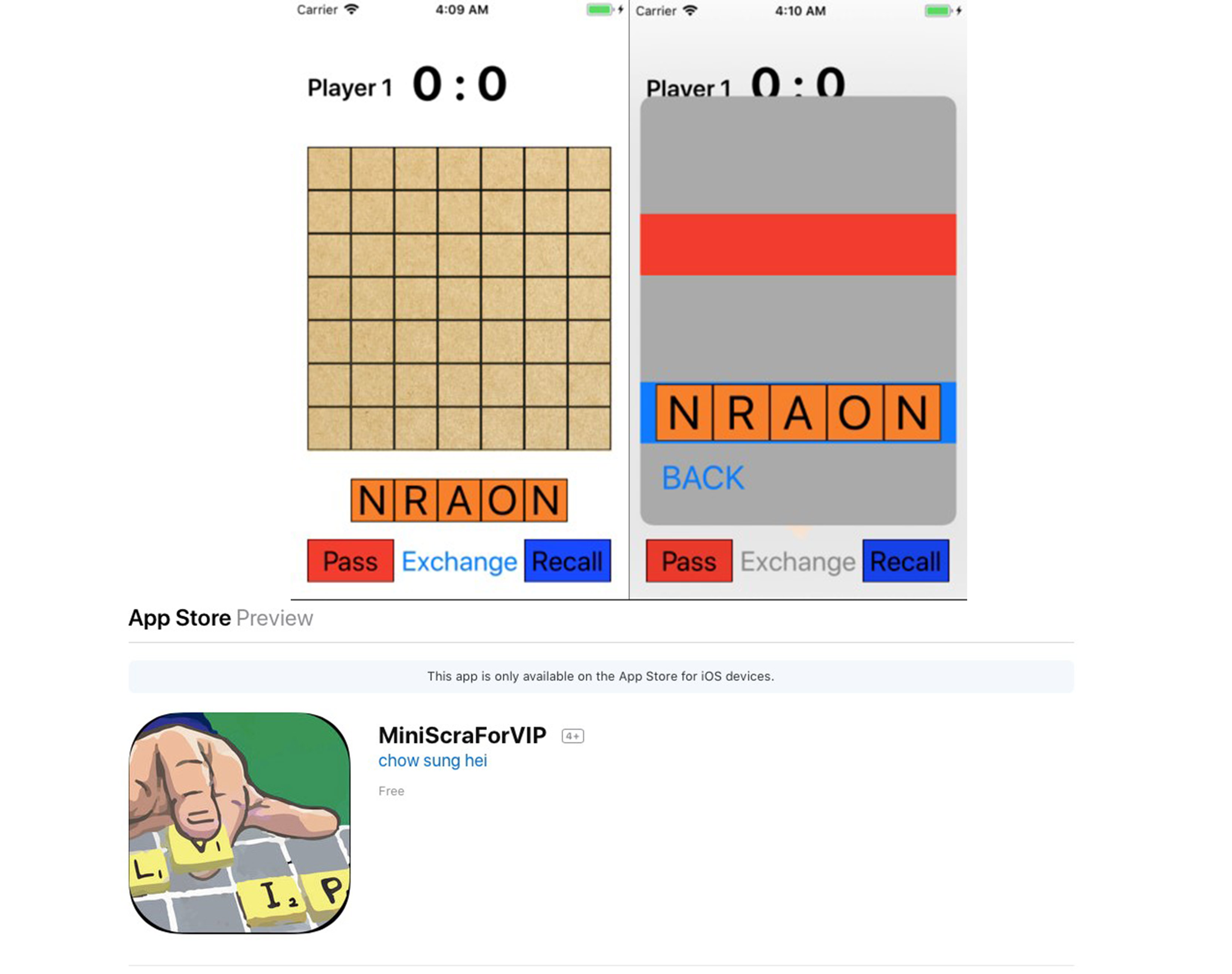

Game for VIP - Mini Scrabble on iOS

|

|

Dr Kelvin Shiu Yin Yuen

Department of Electrical Engineering

Partnered NGO:

Hong Kong Federation of the Blind

|

|

Project Description:

This project aims to present a board game, Scrabble, on iOS with accessibility for visually impaired people (VIPs). VIPs can read the contents of the screen via VoiceOver, a built-in accessibility feature of iOS, by touching the screen. They can also tap and flick the screen to select or move elements via VoiceOver gestures. More broadly, the project aims to raise developers’ awareness of VIPs’ entertainment needs and encourage them to add accessibility when developing iOS apps.

Software / Hardware Available:

App on iOS

Technology Available:

VoiceOver on iOS

|

|

Project Title:

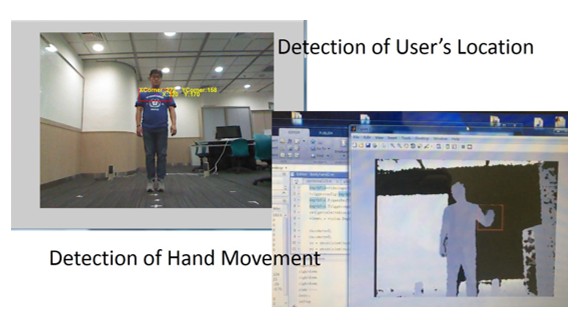

Design a Sport Game for the Visually Impaired

|

|

Supervisor: Dr Kelvin Shiu Yin Yuen

Student: Wing Sum Tang

Department of Electrical Engineering

Partnered NGO:

Hong Kong Blind Union

Hong Kong Federation of the Blind

|

|

Project Description:

This project introduces a two-player computer tennis game that lets visually impaired people (VIP) enjoy sports and physical activity. Several speaker arrays are set up to play a tennis ball sound. Players have to reach the corresponding zone by identifying which speaker sounded. A camera detects the player’s motion and determines whether the player reached the correct zone. Finally, each player’s score and the winner are announced.

GitHub link: https://github.com/charlietang075/Tennis-ball-game-for-VIP

Software / Hardware Available:

1 and 2-player tennis game for VIP

|

|

Project Title:

Wheelchair Battery Health Condition Monitoring Modules

|

|

Professor Henry Shu Hung Chung

Department of Electrical Engineering

Partnered NGO:

Direction Association for the Handicapped

|

|

Project Description:

This project aims to develop a diagnostic module for wheelchairs. The module informs users of the real-time state of charge and state of health of the batteries. It can also tell users how far the wheelchair can still travel on the remaining battery charge.
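The three quantities mentioned above can be related with some simple arithmetic. The sketch below uses common textbook definitions (coulomb counting for state of charge, capacity ratio for state of health); it is an assumption for illustration, not the module’s actual algorithm, and all numbers are invented.

```python
def state_of_charge(initial_soc, drawn_ah, capacity_ah):
    """Coulomb counting: subtract the charge drawn so far, clamped to [0, 1]."""
    soc = initial_soc - drawn_ah / capacity_ah
    return max(0.0, min(1.0, soc))

def state_of_health(measured_capacity_ah, rated_capacity_ah):
    """Ratio of the capacity the battery can hold now to its rated capacity."""
    return measured_capacity_ah / rated_capacity_ah

def remaining_range_km(soc, capacity_ah, nominal_voltage, wh_per_km):
    """Remaining energy (Wh) divided by an assumed average consumption per km."""
    remaining_wh = soc * capacity_ah * nominal_voltage
    return remaining_wh / wh_per_km

# Hypothetical 24 V pack rated at 50 Ah that now holds only 40 Ah.
soh = state_of_health(measured_capacity_ah=40.0, rated_capacity_ah=50.0)
soc = state_of_charge(initial_soc=1.0, drawn_ah=10.0, capacity_ah=40.0)
km = remaining_range_km(soc, capacity_ah=40.0, nominal_voltage=24.0, wh_per_km=40.0)
print(f"SoH {soh:.0%}, SoC {soc:.0%}, about {km:.0f} km remaining")
```

A real module would also need to account for temperature, load and voltage sag, which are left out here.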

|

|

Project Title:

Somewhere in Time Museum

|

|

Dr Charlie Qiuli Xue

Department of Architecture and Civil Engineering

Partnered NGO:

The Rotary Club of Hong Kong (Kowloon East)

|

|

Project Description:

The Rotary Club of Hong Kong (Kowloon East) is planning to build a small museum featuring collections of antiquity in Sai Kung. Led by Dr Charlie Xue, a team of architecture students from the City University of Hong Kong is involved in the design of the museum. The team carried out a preliminary site analysis and survey, and submitted a report to the Rotary Club in July 2018. All work was conducted on a voluntary basis in support of the Rotary Club’s community services. The project is ongoing and involves government departments such as the Lands Department and the Buildings Department. Through this project, our students are able to apply their skills and knowledge outside the classroom.

|

|

Project Title:

Developing an Aid to Help the Visually Impaired to Navigate at MTR Platform

|

|

Supervisor: Dr Kelvin Shiu Yin Yuen

Student: Ho Ming Ng

Department of Electrical Engineering

Partnered NGO:

Hong Kong Blind Union

|

|

Project Description:

The MTR is one of the major means of transport for visually impaired people (VIP) in Hong Kong. However, many VIP find navigating in MTR stations challenging because of the irregular and complicated structure of the stations, especially stations they seldom or never visit. Such difficulties can lengthen their travelling time and cause inconvenience on their journey. To help VIP navigate on MTR platforms, an aid using iBeacon and the iPhone was developed to provide useful information such as the user’s current location, the location of escalators, the distance to escalators and the exits to which each escalator leads. With the VoiceOver function of iOS switched on, the user taps different parts of the screen to hear the corresponding information read out. This proof-of-concept aid has been field-tested on an MTR platform and was found to work well.
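One common way to turn a beacon’s received signal strength into an approximate distance is the log-distance path-loss model. The sketch below illustrates that general technique; the calibration values and beacon labels are hypothetical, and the project’s own distance calculation is not described here.

```python
def estimate_distance_m(rssi_dbm, measured_power_dbm=-59.0, path_loss_exponent=2.0):
    """Log-distance path-loss model: d = 10 ** ((P_1m - RSSI) / (10 * n)).

    `measured_power_dbm` is the RSSI expected at 1 metre and `path_loss_exponent`
    describes the environment; both defaults are assumed calibration values.
    """
    return 10 ** ((measured_power_dbm - rssi_dbm) / (10.0 * path_loss_exponent))

def nearest_beacon(readings):
    """Return (label, distance in metres) for the beacon estimated to be closest."""
    label = min(readings, key=lambda name: estimate_distance_m(readings[name]))
    return label, estimate_distance_m(readings[label])

# Hypothetical RSSI readings (dBm) from beacons placed near two escalators.
readings = {"Escalator to Exit A": -79.0, "Escalator to Exit B": -65.0}
label, distance = nearest_beacon(readings)
print(f"{label}: about {distance:.1f} metres away")
```

RSSI fluctuates considerably in crowded stations, so estimates like this are usually smoothed over several readings before being announced.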

More about the project: https://www.youtube.com/watch?v=qPim_4rZqFE&feature=youtu.be

FYP report: http://dspace.cityu.edu.hk/handle/2031/8276

|

Project Title:

Two Chess Games Developed for the Visually Impaired

|

Supervisor: Dr Kelvin Shiu Yin Yuen

Student: Ming Leong Lam

Department of Electrical Engineering

Partnered NGOs:

Hong Kong Blind Union

The Hong Kong Federation of the Blind

|

|

Project Description:

At present, visually impaired people (VIP) play chess games using a special board and chess pieces. This project aimed to explore innovative ways of presenting chess board positions to make the game experience more enjoyable for VIP. Two accessible iOS chess games, “Tic-Tac-Toe” and “Dou Shou Qi”, were developed. With the help of VoiceOver, VIP can easily follow what is happening on the board and play using user-friendly controls.

|

Project Title:

Virtual Sports System for the Visually Impaired

|

Dr Leanne Lai Hang Chan

Department of Electrical Engineering

Partnered NGO:

Hong Kong Blind Union

|

|

Project Description:

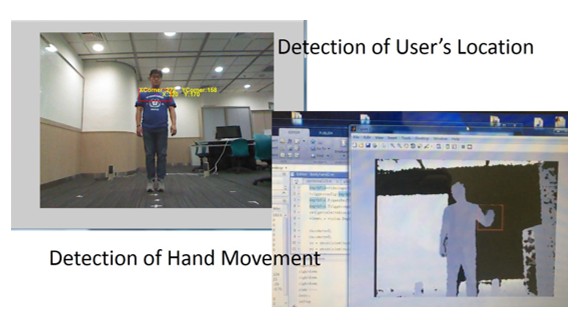

This project aimed to design a virtual sports system that provides a safe and effective exercise platform for the visually impaired, to increase their exercise compliance. The simulated tennis game was written in MATLAB and used the Kinect system and four loudspeakers. Five game zones were designed for users to earn points. Users were asked to reach the corresponding zone after hearing a tennis serving sound generated by MATLAB via the loudspeakers, and earned points by stepping into the correct zone indicated by the speakers. The Kinect detected the users’ hand movements as they swung a racket.

The colour and depth sensors of the Kinect were used to detect the shirt colour of the user, and the user’s location was analyzed using the MATLAB Image Acquisition and Image Processing toolboxes. Sounds were sent in randomized order to the loudspeakers, each addressed through the soundcards by its own speaker ID, using the Data Acquisition toolbox. Hand movement was detected with the depth sensor by reading the body posture joint indices of the right hand, which are made accessible as metadata on the data stream.
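The scoring rule described above can be sketched in a few lines. The original system was written in MATLAB; this Python sketch only illustrates the logic, and the zone layout is hypothetical because the description does not say how the five zones are laid out or how they map to the four loudspeakers.

```python
import random

# Hypothetical layout: five game zones as (x_min, x_max) ranges in metres.
ZONES = {1: (0.0, 1.0), 2: (1.0, 2.0), 3: (2.0, 3.0), 4: (3.0, 4.0), 5: (4.0, 5.0)}

def zone_of(x):
    """Return the zone containing horizontal position `x`, or None if outside."""
    for zone, (low, high) in ZONES.items():
        if low <= x < high:
            return zone
    return None

def pick_target(rng):
    """Choose the next target zone at random, like the randomized serve sounds."""
    return rng.choice(list(ZONES))

def play_round(target_zone, player_x):
    """Award one point when the tracked player position is in the target zone."""
    return 1 if zone_of(player_x) == target_zone else 0

rng = random.Random(2024)  # seeded so that a session can be replayed
target = pick_target(rng)
points = play_round(target, player_x=2.5)
print(f"Target zone {target}, points earned: {points}")
```

In the real system the player position would come from the Kinect colour and depth data rather than being passed in directly.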

|

|

Project Title:

Scene Recognition Based Navigation System for the Blind

|

|

Dr Leanne Lai Hang Chan

Department of Electrical Engineering

Partnered NGO:

Hong Kong Blind Union

|

|

Project Description:

About 17,000 people in Hong Kong (2.4% of the total population) are visually impaired. Besides guide dogs and sticks, indoor navigation relies on high-cost add-ons such as sensors, RFID and Wi-Fi. Convolutional neural networks (CNNs) have achieved impressive performance in object detection and scene recognition, but they are rarely applied to indoor navigation for the visually impaired. This project proposes a CNN-based indoor scene recognition system to improve mobility for the visually impaired.

A dataset of 6,100 images was collected from the Hong Kong Blind Union and an online image dataset. A CNN model based on AlexNet was optimized on this dataset and deployed in an Android app. The resulting model achieves 85% accuracy on the test dataset. Furthermore, the model’s behavior was studied using the Class Activation Mapping technique, which indicates how the model classifies scenes based on discriminative image regions and spatial features. This study shows that a CNN is a potential solution for recognizing indoor scenes, which could be applied to give visually impaired people real-time navigation. Expanding the training dataset and combining the system with wearable devices could further improve performance.
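For background, Class Activation Mapping is usually written as below. This is the standard formulation, given only as a reference. The description does not state which variant the project used, and the formulation assumes a global-average-pooling layer before the final classifier.

$$
M_c(x, y) = \sum_k w_k^{c} \, f_k(x, y)
$$

Here $f_k(x, y)$ is the activation of feature map $k$ in the last convolutional layer at spatial location $(x, y)$, and $w_k^{c}$ is the classifier weight connecting the pooled feature $k$ to scene class $c$. Regions where $M_c(x, y)$ is large are the image regions that contribute most to the prediction of class $c$.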

|

|

Project Title:

CityU Apps Lab – Posture Check App

|

|

Supervisor: Dr Ray Chak Chung Cheung

Students: Tsz Wing Kwok & Debarun Dhar

Department of Electrical Engineering

Partnered NGO:

The Chiropractic Doctors' Association of Hong Kong

|

|

Project Description:

This project serves to enhance the CityU Apps Lab's Posture Check mobile App by proposing a pipeline for the automatic detection of posture keypoints in the full-body front and side view images of human users. In the proposed method, the keypoint estimation is formulated as a regression problem which is solved using a deep learning approach.

The first half of this project is concerned with the development of a lightweight Convolutional Neural Network (CNN) architecture for human pose estimation on the FashionPose dataset. The model is shown to achieve results comparable with the current state of the art. In the latter half, a new dataset of 900 images annotated with posture keypoints is used to adapt the pre-trained CNN to the new domain of posture keypoint estimation using transfer learning techniques. Two approaches to transfer learning are explored and evaluated. Finally, the quantitative and qualitative results from the completed pipeline are presented. They demonstrate a high detection rate together with a fast prediction speed and a comparatively small memory footprint.
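A keypoint detection rate is often computed as the fraction of predicted keypoints that fall within a tolerance of the annotated position. The sketch below shows that general idea; the exact metric and threshold used in the project are not stated, so the function and the sample coordinates are illustrative assumptions.

```python
import math

def detection_rate(predicted, ground_truth, threshold):
    """Fraction of keypoints predicted within `threshold` pixels of the annotation."""
    hits = sum(
        1
        for p, g in zip(predicted, ground_truth)
        if math.dist(p, g) <= threshold
    )
    return hits / len(ground_truth)

# Hypothetical (x, y) pixel coordinates for three posture keypoints.
predicted = [(10.0, 10.0), (20.0, 22.0), (50.0, 50.0)]
annotated = [(10.0, 11.0), (20.0, 20.0), (40.0, 50.0)]
print(f"Detection rate: {detection_rate(predicted, annotated, threshold=3.0):.2f}")
```

Pose estimation benchmarks often scale the threshold by body size so that results are comparable across images of different resolution.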

More about the project: Posture Check App

Mobile app helps check the spine (手機App助查脊骨)

|